This post is a correction to the following Reddit post. While the main claims and conclusions of the original post hold, the accuracy it reported was inflated by an overfitting issue.

TLDR: When I published the Reddit post, and for 2 days afterwards, the model's true accuracy was 52%, and the model was overconfident, giving extreme predictions. The model is now fixed: its accuracy is around 55% (as of April 4, 2025), and its predictions are more reasonable. Thanks to user /u/Impossible_Concert88 for trying to verify the accuracy claims, which led me to discover this bug.

Bug description

So why was the accuracy wrong? The issue came from the data collection process: instead of collecting data from 4 regions, it only collected data from EUW, but labeled some of it as coming from KR, OCE and NA. As a result, almost every match was stored 4 times.

This is a problem because training data and test data are supposed to be kept separate. Here, because the original dataset contained duplicates, most matches ended up in both the training set and the test set, even after splitting the data in two. When this happens, it is impossible to detect overfitting, that is, when the model memorizes match outcomes instead of learning general patterns.
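The effect of the duplicates on the split can be reproduced with a small simulation. The sketch below is hypothetical (the row format and the helpers `make_duplicated_dataset`, `random_split`, `leak_fraction` and `deduplicate` are illustrative, not the code used for the model): it stores each match once per region label, as the bug did, splits the rows at random, and measures how many test rows share a match ID with the training set. Deduplicating by match ID before splitting brings that share to zero.

```python
import random

def make_duplicated_dataset(num_matches, regions=("EUW1", "KR", "OCE", "NA")):
    """Mimic the bug: every EUW match is stored once per region label."""
    rows = []
    for match_id in range(num_matches):
        for region in regions:
            rows.append({"match_id": match_id, "region": region})
    return rows

def random_split(rows, test_fraction=0.2, seed=0):
    """Naive row-level split that treats every row as independent."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]

def leak_fraction(train, test):
    """Share of test rows whose match_id also appears in the training set."""
    train_ids = {row["match_id"] for row in train}
    return sum(row["match_id"] in train_ids for row in test) / len(test)

def deduplicate(rows):
    """Keep only the first row seen for each match_id."""
    seen = set()
    unique = []
    for row in rows:
        if row["match_id"] not in seen:
            seen.add(row["match_id"])
            unique.append(row)
    return unique

rows = make_duplicated_dataset(1000)
train, test = random_split(rows)
print(f"leaked test rows with duplicates: {leak_fraction(train, test):.1%}")

train, test = random_split(deduplicate(rows))
print(f"leaked test rows after dedup:     {leak_fraction(train, test):.1%}")
```

With 4 copies per match and a 20% test split, a test row only avoids leaking if all 3 of its other copies also land in the test set, which happens for roughly 0.2³ ≈ 0.8% of rows, so nearly every test row is a match the model has already seen.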

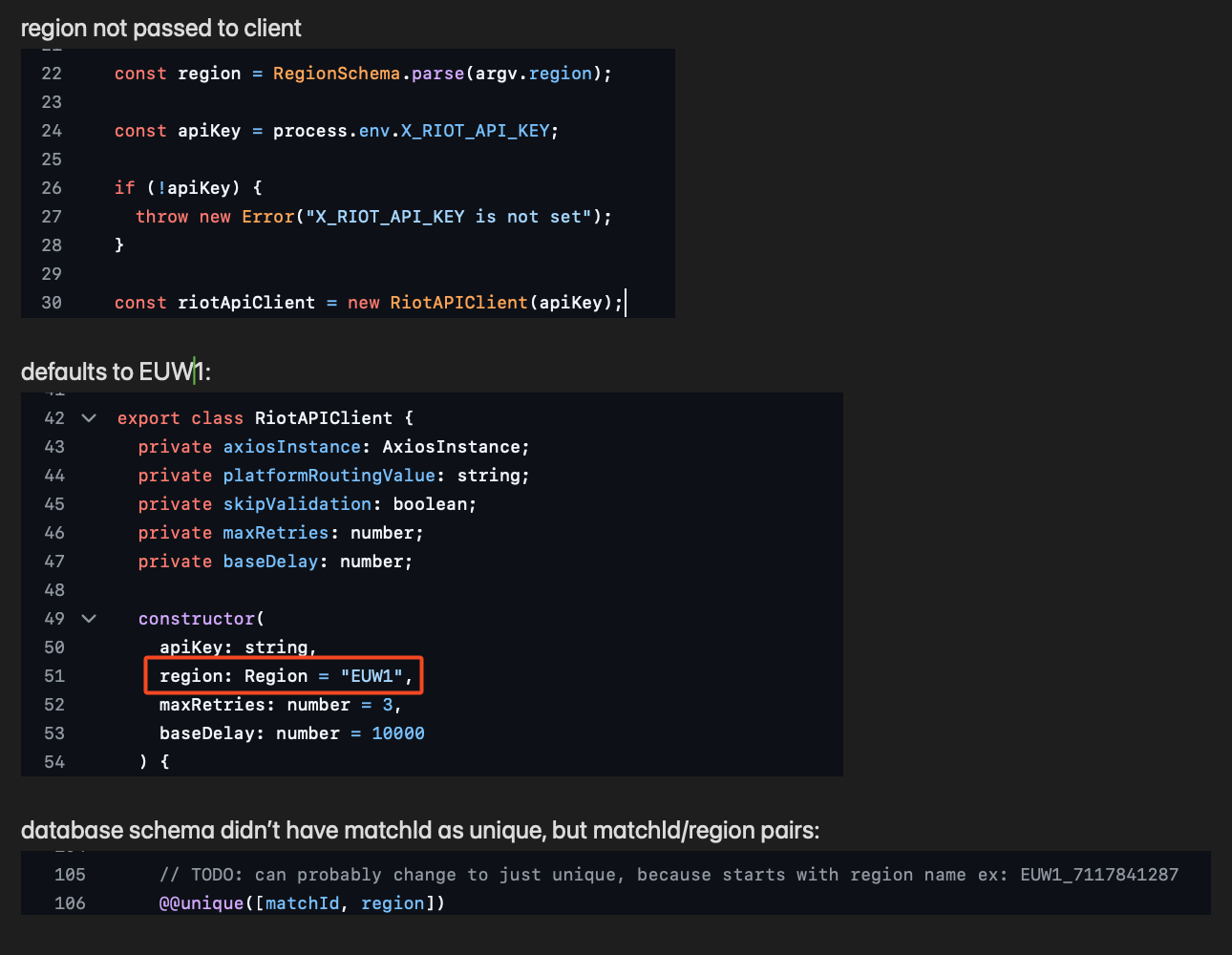

Why exactly was the data from EUW labeled as coming from KR, OCE and NA? Because of a silly mistake. I had written a Riot API client that takes the region as a parameter when it is created, but the region defaulted to EUW1 if none was passed, and in the data collection code I forgot to specify the region. Furthermore, there was no constraint in the database to ensure that each match ID is unique.
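Both safeguards can be sketched in Python with the standard `sqlite3` module. This is a hypothetical example, not the project's actual client or schema: `RiotApiClient` is a stand-in class whose `region` argument is keyword-only with no default, so forgetting it raises a `TypeError` instead of silently falling back to EUW1, and the illustrative `matches` table declares `match_id` as its primary key, so storing the same match twice is rejected. The match ID `"EUW1_123"` is made up.

```python
import sqlite3

class RiotApiClient:
    """Hypothetical stand-in for the post's API client."""

    def __init__(self, *, region: str):
        # Keyword-only with no default: RiotApiClient() raises TypeError
        # instead of silently using EUW1.
        self.region = region

def create_matches_table(conn: sqlite3.Connection) -> None:
    # PRIMARY KEY makes SQLite reject a second row with the same match_id.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS matches ("
        "match_id TEXT PRIMARY KEY, "
        "region TEXT NOT NULL)"
    )

def store_match(conn: sqlite3.Connection, match_id: str, region: str) -> bool:
    """Insert one match; return False if that match_id is already stored."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(
                "INSERT INTO matches (match_id, region) VALUES (?, ?)",
                (match_id, region),
            )
        return True
    except sqlite3.IntegrityError:
        return False

conn = sqlite3.connect(":memory:")
create_matches_table(conn)
print(store_match(conn, "EUW1_123", "EUW1"))  # True
print(store_match(conn, "EUW1_123", "KR"))    # False: same match, different label
```

Either safeguard alone would have surfaced the bug: the first fails loudly at the call site, and the second stops the duplicates from ever reaching the dataset.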

Code mistakes

Here are the lines of code that caused the mistake:

Bug resolution

I quickly fixed the bug that duplicated the rows and uploaded a fixed version of the model on April 4, about 2 days after my Reddit post. The new model has an accuracy of 55%. Data collection is also fixed, so the accuracy may improve once more data from other regions has been gathered. I will also rerun experiments to see which model architecture works best, since the previous experiments were biased by the duplicated data.

Conclusion

I am sorry about the mistake in the Reddit post. In the future, I will create a simple script that lets anyone verify the model's accuracy. I will also try to improve the accuracy beyond 55%, but the actual ceiling is unknown. My guess is that it is a few percentage points above 55%, and that 62% might be impossible, because the draft is only a small part of the game.

Further details for technical readers

The new model's loss on the win prediction task is 0.684, which might seem high for a binary classification task (always predicting a 50% win chance gives a loss of 0.693). But since the task is very hard and noisy, it is difficult to judge whether this is good. In the future, I will create a game that lets users predict match outcomes, to establish a baseline to compare the model against.
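The 0.693 baseline equals ln 2, which suggests the loss is binary cross-entropy with natural logarithms (an assumption; the post does not name the loss). The sketch below computes that loss directly, plus the expected loss of a hypothetical model that always gives the favoured team a 55% win chance and is exactly right about it. The function names are illustrative.

```python
import math

def log_loss(probs, outcomes, eps=1e-15):
    """Mean binary cross-entropy (natural log) of predicted win probabilities."""
    total = 0.0
    for p, y in zip(probs, outcomes):
        p = min(max(p, eps), 1 - eps)  # avoid log(0) on extreme predictions
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(probs)

def calibrated_constant_loss(p):
    """Expected loss if the model always says p and the favourite wins with probability p."""
    return -(p * math.log(p) + (1 - p) * math.log(1 - p))

# Always predicting 50% costs ln 2 per game, whatever the outcomes are.
outcomes = [1, 0, 1, 1, 0, 0, 1, 0]
print(round(log_loss([0.5] * len(outcomes), outcomes), 3))  # 0.693
print(round(calibrated_constant_loss(0.55), 3))             # 0.688
```

Because binary entropy is concave, a calibrated model whose predictions vary from game to game can reach a lower loss than 0.688 at the same 55% accuracy, so a loss slightly below that value is not surprising.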

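I will also try to provide more metrics and verification methods for the model. As an additional validation of the new model, here is a table of accuracy buckets. This evaluation splits the validation predictions into buckets based on the predicted win chance, then checks whether, on average, a team predicted to have a 60% win chance actually wins about 60% of the time. The results show that the model is well calibrated in the 50-65% buckets, which contain almost all predictions; in the higher buckets, the actual accuracy exceeds the expected accuracy, so the model is slightly underconfident there, although those buckets contain far fewer samples.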

| Predicted win chance | Num Samples | Accuracy | Expected Accuracy (bucket midpoint) |
|---|---|---|---|
| 50-55% | 428,768 | 0.524853 | 0.525 |
| 55-60% | 271,010 | 0.573794 | 0.575 |
| 60-65% | 78,046 | 0.635753 | 0.625 |
| 65-70% | 4,807 | 0.712919 | 0.675 |
| 70-75% | 386 | 0.797927 | 0.725 |
| 75-80% | 87 | 0.816092 | 0.775 |
| 80-85% | 41 | 0.878049 | 0.825 |
| 85-90% | 32 | 0.937500 | 0.875 |
| 90-95% | 2 | 1.000000 | 0.925 |
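The bucketing behind this table can be sketched as follows. This is a hypothetical reconstruction, not the evaluation code used for the model: it assumes each prediction is the win probability of one team together with whether that team won, flips every game so it is viewed from the favoured team's side (which would explain why all buckets start at 50%), and uses the bucket midpoint as the expected accuracy, matching the table. `calibration_table` and the sample data are illustrative.

```python
import math
from collections import defaultdict

def calibration_table(probs, outcomes, bucket_width=0.05):
    """Return (low, high, num_samples, accuracy, expected_accuracy) per non-empty bucket.

    probs[i] is the predicted win chance of one team in game i and
    outcomes[i] is 1 if that team won, 0 otherwise.
    """
    num_buckets = round(0.5 / bucket_width)
    counts = defaultdict(lambda: [0, 0])  # bucket index -> [games, correct]
    for p, won in zip(probs, outcomes):
        if p < 0.5:
            # View the game from the favoured team's side.
            p, won = 1 - p, 1 - won
        # round() guards against float error at bucket edges such as 0.6.
        idx = math.floor(round((p - 0.5) / bucket_width, 9))
        idx = min(idx, num_buckets - 1)  # put p == 1.0 in the last bucket
        counts[idx][0] += 1
        counts[idx][1] += won
    table = []
    for idx in sorted(counts):
        low = 0.5 + idx * bucket_width
        high = low + bucket_width
        games, correct = counts[idx]
        table.append((low, high, games, correct / games, (low + high) / 2))
    return table

probs = [0.52, 0.53, 0.57, 0.58, 0.30, 0.62]
outcomes = [1, 0, 1, 1, 0, 1]
for low, high, n, acc, expected in calibration_table(probs, outcomes):
    print(f"{low:.0%}-{high:.0%}  n={n}  accuracy={acc:.3f}  expected={expected:.3f}")
```

Using the mean predicted probability within each bucket, instead of the midpoint, would be a slightly more precise choice of expected accuracy, especially for wide or sparsely populated buckets.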