LoLDraftAI uses a custom-trained deep learning model to predict draft outcomes. The model is trained on millions of games, which gives it an in-depth understanding of champions and game dynamics. Because the model has to be fully retrained, which takes a few hours, it is only updated every few days.

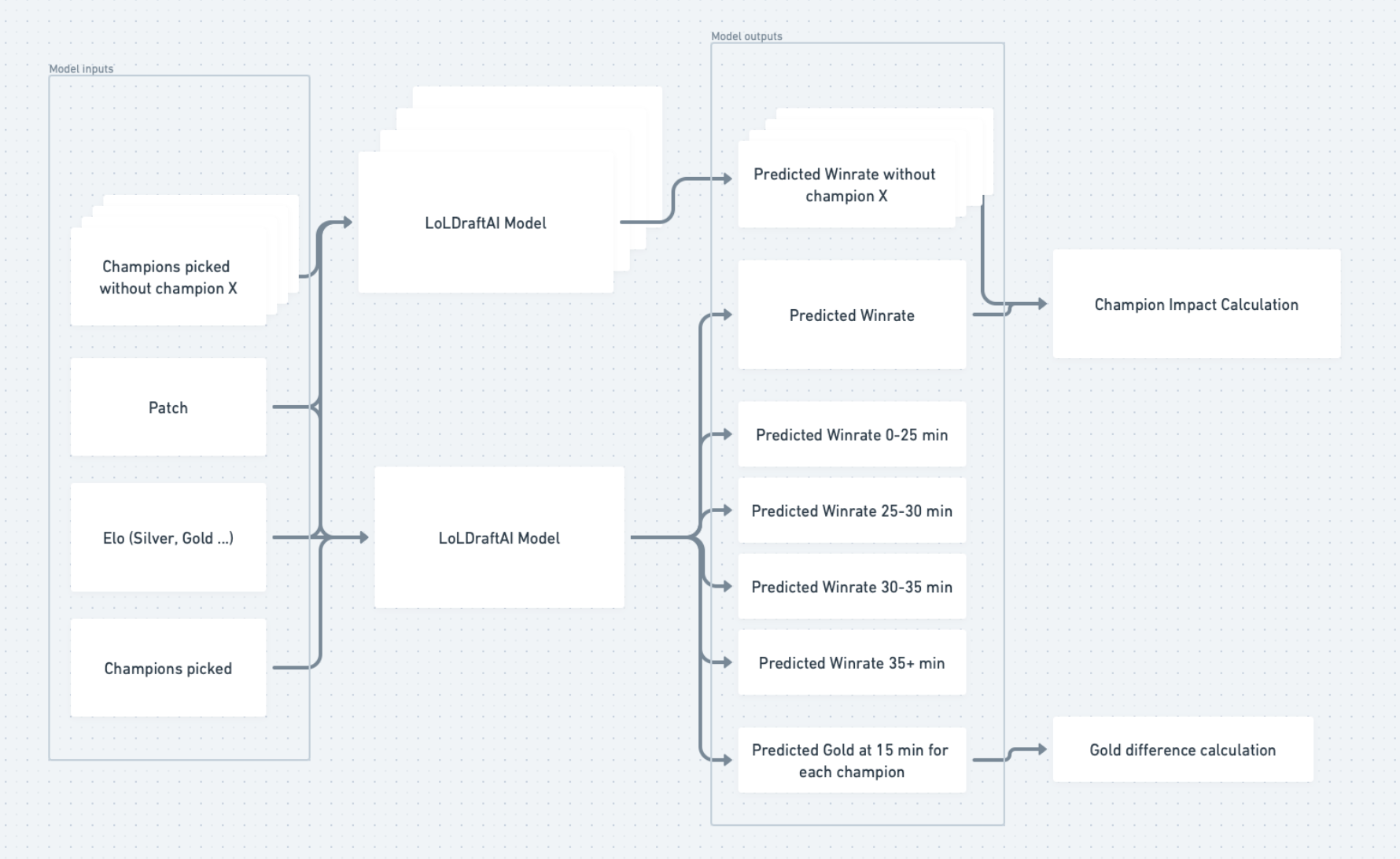

Model architecture

Model inputs

The model takes only the following as input:

- champions played

- patch

- elo

Because the model is trained on millions of games, it doesn't need precomputed statistics (such as champion winrates) as inputs. Instead, through the deep learning training process, the model learns and internalizes champion statistics by itself.
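As an illustration, inputs like these could be encoded as plain categorical ids that a network then embeds. This is a minimal sketch under assumptions. The vocabularies, the slot order (5 blue then 5 red), the patch strings and the elo tiers below are hypothetical and are not LoLDraftAI's actual encoding. The point is that only identifiers go in, and no statistics do.

```python
from dataclasses import dataclass

# Hypothetical vocabularies. Id 0 is reserved for the UNKNOWN token (missing champion).
UNKNOWN_ID = 0
CHAMPION_VOCAB = {"UNKNOWN": UNKNOWN_ID, "Ivern": 1, "Nautilus": 2, "Kassadin": 3, "Malphite": 4}
PATCH_VOCAB = {"15.16": 0, "15.17": 1}
ELO_VOCAB = {"gold": 0, "platinum": 1, "emerald": 2, "diamond": 3, "master+": 4}


@dataclass
class DraftInput:
    champions: list[str]  # 10 slots: 5 blue then 5 red (assumed order)
    patch: str
    elo: str


def encode(draft: DraftInput) -> list[int]:
    """Turn a draft into categorical ids; no winrate statistics are included."""
    if len(draft.champions) != 10:
        raise ValueError("a draft has exactly 10 champion slots")
    champion_ids = [CHAMPION_VOCAB[name] for name in draft.champions]
    return champion_ids + [PATCH_VOCAB[draft.patch], ELO_VOCAB[draft.elo]]


print(encode(DraftInput(["Ivern"] + ["UNKNOWN"] * 9, "15.17", "emerald")))
```

In a real network, each id would typically be looked up in a learned embedding table. That is where champion "statistics" end up being internalized during training.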

Model outputs

A single model produces all of the following outputs:

- winrate

- winrate if the game ends in each of the 0-25 min, 25-30 min, 30-35 min and 35+ min time slots

- gold at 15 min for each champion (which is then used to calculate the gold difference at 15 min for each matchup).

Because all these outputs come from the same model, we are sure they are consistent with each other.
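The gold outputs are per champion. The gold difference for a matchup is then just a subtraction between lane opponents. This is a minimal sketch, assuming both teams are listed in the same role order. The role names and gold numbers below are illustrative, not real model output.

```python
ROLES = ["top", "jungle", "mid", "bot", "support"]  # assumed slot order on both teams


def gold_diff_at_15(blue_gold: list[float], red_gold: list[float]) -> dict[str, float]:
    """Blue-minus-red gold difference at 15 min for each lane matchup.

    Index i on one team is assumed to be the lane opponent of index i on the other.
    """
    if len(blue_gold) != len(ROLES) or len(red_gold) != len(ROLES):
        raise ValueError("expected one gold value per role on each team")
    return {role: blue - red for role, blue, red in zip(ROLES, blue_gold, red_gold)}


# Hypothetical per-champion gold predictions at 15 minutes.
print(gold_diff_at_15([5600, 5100, 5900, 6200, 3700], [5300, 5400, 5900, 5800, 3900]))
```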

Figure 1: LoLDraftAI model inputs and outputs

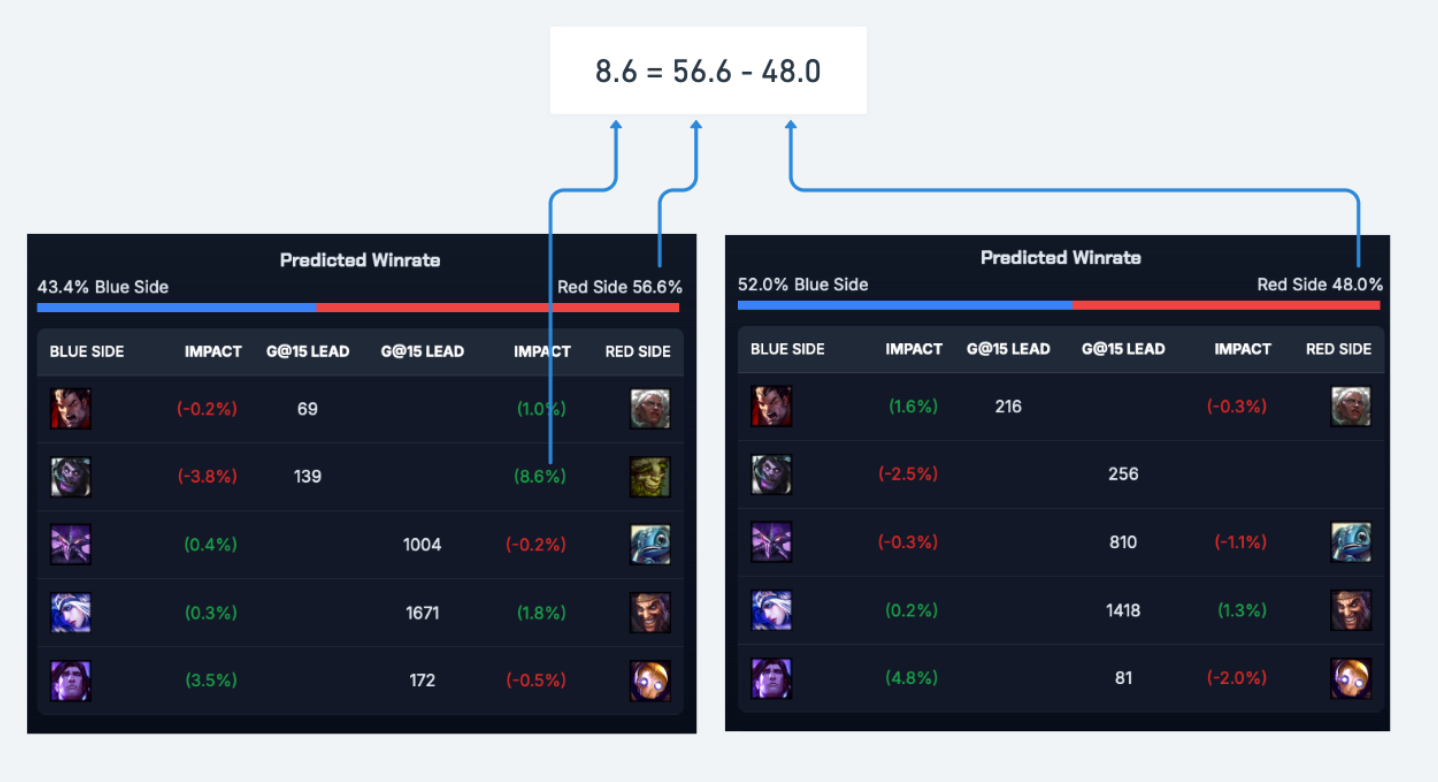

Impact is shown in the analysis, but it is not a direct model output. Instead, it is calculated from model predictions. It is the difference in winrate between the draft with a champion and the same draft without that champion. For example, in the draft from Figure 2, Ivern increases his team's win chance by 8.6 percentage points. With Ivern, the team's winrate is 56.6%, so with an "average" jungler it would be 56.6 - 8.6 = 48.0%.
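A minimal sketch of the impact calculation follows. It rests on two assumptions. First, the "average" champion is represented by replacing the pick with the UNKNOWN token described in the incomplete-drafts section. Second, `toy_predict` is a hypothetical stand-in for the real model that simply reproduces the Ivern numbers.

```python
from typing import Callable

UNKNOWN = "UNKNOWN"  # token for a missing champion, as in incomplete drafts


def impact(predict: Callable[[list[str]], float], draft: list[str], slot: int) -> float:
    """Winrate with the champion in `slot` minus winrate with that slot UNKNOWN.

    `predict` must return the win probability of the team that owns `slot`.
    """
    without = list(draft)
    without[slot] = UNKNOWN  # assumed stand-in for an "average" champion
    return predict(draft) - predict(without)


def toy_predict(draft: list[str]) -> float:
    # Hypothetical stand-in for the real model, reproducing the Ivern example.
    return 0.566 if "Ivern" in draft else 0.480


draft = ["Malphite", "Ivern", "Kassadin", "Jinx", "Nautilus"] + [UNKNOWN] * 5
print(round(impact(toy_predict, draft, slot=1), 3))  # 0.086
```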

Impact gives you a sense of how much each champion improves their team's winrate in the final draft. Measuring it on the final draft matters. A pick can be good at the moment it is made (early in the draft phase) and still end up with negative impact because it later got countered or because allies picked champions with anti-synergy.

Figure 2: Impact calculation example

Champion suggestions

Champion suggestions are generated by evaluating a separate draft for each candidate champion and then sorting the candidates by predicted winrate, highest first.
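The suggestion loop can be sketched as follows. This assumes `predict` returns the win probability of the team that owns the open slot. `toy_predict` and its per-champion bonuses are hypothetical stand-ins for the real model.

```python
from typing import Callable


def suggest(
    predict: Callable[[list[str]], float],
    draft: list[str],
    slot: int,
    candidates: list[str],
) -> list[tuple[str, float]]:
    """Score one hypothetical draft per candidate and sort by predicted winrate."""
    scored = []
    for champion in candidates:
        if champion in draft:  # a champion can only appear once per game
            continue
        trial = list(draft)
        trial[slot] = champion
        scored.append((champion, predict(trial)))
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored


# Hypothetical stand-in model: a fixed winrate bonus per champion.
BONUS = {"Ivern": 0.03, "Malphite": 0.05, "Kassadin": 0.01}


def toy_predict(draft: list[str]) -> float:
    return 0.5 + sum(BONUS.get(champion, 0.0) for champion in draft)


empty = ["UNKNOWN"] * 10
for champion, winrate in suggest(toy_predict, empty, slot=0, candidates=list(BONUS)):
    print(champion, round(winrate, 2))
```

Each candidate costs one model evaluation, so ranking the whole champion pool means one prediction per champion.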

How does the model predict incomplete drafts?

For incomplete drafts, the model receives a champion list in which some champions are marked as UNKNOWN, a special token that represents a missing champion. The model learns to handle incomplete drafts because, during training, drafts from real games are randomly masked. We mask randomly because Riot's data does not include the original pick order.
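A sketch of random masking follows. It assumes the number of masked slots is drawn uniformly from 0 to 10 and the slots themselves are then chosen uniformly. The exact masking distribution LoLDraftAI uses is not stated here, so treat this as one plausible choice.

```python
import random

UNKNOWN = "UNKNOWN"


def random_mask(draft: list[str], rng: random.Random) -> list[str]:
    """Hide a random subset of champions behind the UNKNOWN token.

    Slots are chosen with no notion of pick order, since the game data
    does not record which champion was picked first.
    """
    n_masked = rng.randint(0, len(draft))  # assumption: inclusive, 0 to 10 slots
    masked = set(rng.sample(range(len(draft)), n_masked))
    return [UNKNOWN if i in masked else champion for i, champion in enumerate(draft)]


rng = random.Random(0)  # seeded so the example is reproducible
game = ["Malphite", "Ivern", "Kassadin", "Jinx", "Nautilus",
        "Teemo", "Nidalee", "Ahri", "Caitlyn", "Lulu"]
print(random_mask(game, rng))
```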

However, this random masking method has a theoretical flaw. Because it cannot take the original pick order into account, the model is not aware of "blind pickability", that is, whether a champion is safe to pick before the enemy has revealed their picks.

To illustrate this, imagine a hypothetical champion called KILL_NAUT. It is played against Nautilus in 99% of its games and wins 100% of them. It is played against other champions in the remaining 1% of its games and wins 0% of those. Because masking hides whether Nautilus is on the enemy team, the model would learn that in incomplete drafts containing KILL_NAUT, KILL_NAUT's team wins 99% of the time (0.99 × 100% + 0.01 × 0%). It would then suggest blind picking KILL_NAUT.
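The KILL_NAUT arithmetic fits in a few lines. It contrasts the winrate the model learns with the winrate of a true blind pick. The 10% Nautilus rate for a blind pick is a hypothetical number, added only for contrast.

```python
# Hypothetical KILL_NAUT numbers from the example above.
p_vs_naut = 0.99     # share of KILL_NAUT games played against Nautilus
win_vs_naut = 1.00   # winrate in those games
win_vs_other = 0.00  # winrate in all other games

# With random masking the enemy is often UNKNOWN, so the model learns the
# winrate averaged over the enemies KILL_NAUT was actually picked into.
marginal = p_vs_naut * win_vs_naut + (1 - p_vs_naut) * win_vs_other

# A true blind pick faces whatever the enemy picks. Hypothetically, Nautilus
# shows up in only 10% of those games.
p_naut_blind = 0.10
blind = p_naut_blind * win_vs_naut + (1 - p_naut_blind) * win_vs_other

print(round(marginal, 2), round(blind, 2))  # 0.99 0.1
```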

While this flaw exists in theory, it is not very problematic in practice, because no real scenario is as extreme as the KILL_NAUT example, for a few reasons:

- People often blind pick situational and counter-able champions like Kassadin or Malphite

- People don't perfectly counterpick champions

- Riot balances the game by targeting a winrate of around 50% for each champion, so no champion effectively has a 99% winrate

Still, it is worth being aware of this potential flaw. In practice, when the enemy team is mostly unknown, you should not pick a LoLDraftAI-suggested champion that you are not comfortable blind picking.

Pro play model

The LoLDraftAI pro play model is trained by fine-tuning the solo queue model. Fine-tuning means that the fully trained solo queue model is taken as a starting point and then trained further on pro play data. This lets the pro play model reuse the "concepts" learned by the solo queue model, which improves performance compared to training only on the limited data available for pro play.
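The warm-start idea behind fine-tuning can be shown with a toy model. This sketch swaps the deep network for a three-weight logistic regression. The data, learning rates and epoch counts are all made up and do not reflect LoLDraftAI's actual training setup. The only point is that the second stage starts from the first stage's weights instead of from scratch.

```python
import math


def predict(w: list[float], x: list[float]) -> float:
    """Logistic model: probability that the first team wins."""
    z = sum(wi * xi for wi, xi in zip(w, x))
    return 1.0 / (1.0 + math.exp(-z))


def loss(w: list[float], data: list[tuple[list[float], int]]) -> float:
    """Mean log loss over (features, label) pairs."""
    total = 0.0
    for x, y in data:
        p = predict(w, x)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(data)


def train(w: list[float], data: list[tuple[list[float], int]], lr: float, epochs: int) -> list[float]:
    """Full-batch gradient descent that starts from the given weights."""
    w = list(w)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in data:
            error = predict(w, x) - y
            for i, xi in enumerate(x):
                grad[i] += error * xi / len(data)
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return w


# Made-up data: features are [bias, feature_a, feature_b]; label 1 = win.
soloq = [([1.0, 1.0, 0.0], 1), ([1.0, 0.0, 1.0], 0),
         ([1.0, 1.0, 1.0], 1), ([1.0, 0.0, 0.0], 0)] * 50  # plentiful data
pro = [([1.0, 1.0, 0.0], 1), ([1.0, 0.0, 1.0], 1),
       ([1.0, 1.0, 1.0], 1), ([1.0, 0.0, 0.0], 0)]  # scarce data

pretrained = train([0.0, 0.0, 0.0], soloq, lr=0.5, epochs=200)  # solo queue stage
finetuned = train(pretrained, pro, lr=0.1, epochs=50)           # warm start on pro data
print(round(loss(pretrained, pro), 3), round(loss(finetuned, pro), 3))
```

In deep learning frameworks, the same pattern usually means loading the pretrained weights before training on the new dataset, often with a lower learning rate.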

The pro play model takes exactly the same inputs as the solo queue model, which means it doesn't take team strength into account. It is also trained on games from all pro play regions (minor and major) to use as much of the available data as possible.

This approach also has a few potential flaws:

- Because the model is trained on all regions, predictions might be slightly biased towards more "average" and lower-level teams, simply because these teams account for more games. For example, Nidalee might be a bad pick in pro play overall, but not when played by one of the top 5 teams in the world.

- The KILL_NAUT issue explained in the "How does the model predict incomplete drafts?" section is more relevant in pro play, because pro teams actually play some champions only in specific situations. These champions might have inflated winrates while not being blind pickable.

- If some champions are only played by specific teams, their winrate might be artificially affected by those teams' overall level. For example, if a top 3 team in any region picks up Teemo, Teemo will automatically have a high winrate. That happens because the team is simply better than its competition, not necessarily because the pick is good. In practice, however, there are not many team-specific picks in pro play, as most teams copy what is successful.

To sum up, most of these issues stem from the limited amount of pro play data, so there is no real solution to them. In pro play, a machine learning model will likely always serve as a suggestion mechanism, while final draft evaluation is done by humans.

Model results

The code for LoLDraftAI is open source, and its May 2025 version is available on GitHub. Some features have been added since this public version, but the general methodology remains the same.

The training logs for the May 2025 model are also public and available here:

The training logs show that the final solo queue and pro play models both achieve an accuracy of 56%. On drafts they have not been trained on, the models predict the winning team correctly 56% of the time.
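Accuracy here means the favored team won. This is a minimal sketch, assuming each prediction is the blue-side win probability and that a prediction above 50% counts as picking blue. The function name and the tie-breaking rule at exactly 50% are illustrative choices.

```python
def accuracy(predictions: list[float], blue_won: list[bool]) -> float:
    """Fraction of held-out games in which the favored team won.

    predictions[i] is the predicted blue-side win probability for game i.
    """
    correct = sum((p > 0.5) == won for p, won in zip(predictions, blue_won))
    return correct / len(predictions)


print(accuracy([0.6, 0.4, 0.55, 0.52], [True, True, True, False]))  # 0.5
```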

Note that there is no way to tell whether this is the theoretical best result. Most drafts might be close to 50/50, in which case accuracy cannot get much higher. Furthermore, the draft is only a small part of a solo queue game, and many games are decided simply by which team has a smurf or a griefer.

A more relevant evaluation of the model is bucketed accuracy. This method takes the model's predictions on games it has not seen and groups them into buckets by confidence range. For example, suppose the model predicts a 49% winrate for blue team and 51% for red team. That prediction goes in the 50-52% bucket, and if red team wins, the model counts as "accurate" for that game.
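Bucketed accuracy can be computed by grouping predictions by the favored team's win chance. This sketch makes two assumptions. Predictions are blue-side win probabilities, and buckets are 2.5 percentage points wide. The bucket width is inferred from the Expected Accuracy column of the table below, which matches bucket midpoints (0.5125 for the first bucket, 0.5375 for the second). The table labels its buckets with rounded bounds.

```python
import math
from collections import defaultdict


def bucketed_accuracy(
    predictions: list[float], blue_won: list[bool], width: float = 0.025
) -> dict[tuple[float, float], tuple[int, float, float]]:
    """Map each confidence bucket to (games, model accuracy, expected accuracy).

    Expected accuracy is the bucket midpoint, which is roughly what a
    well-calibrated model should score in that bucket.
    """
    counts: dict[int, list[int]] = defaultdict(lambda: [0, 0])  # index -> [games, correct]
    for p_blue, won in zip(predictions, blue_won):
        confidence = max(p_blue, 1.0 - p_blue)  # win chance of the favored team
        favored_won = won if p_blue >= 0.5 else not won
        # Round away floating-point noise (e.g. 0.6 - 0.5 = 0.0999...) before flooring.
        index = math.floor(round((confidence - 0.5) / width, 9))
        counts[index][0] += 1
        counts[index][1] += int(favored_won)
    result = {}
    for index in sorted(counts):
        games, correct = counts[index]
        low = 0.5 + index * width
        high = low + width
        result[(round(low, 4), round(high, 4))] = (games, correct / games, round((low + high) / 2, 4))
    return result


# The example from the text: blue 49%, red 51%, and red wins.
print(bucketed_accuracy([0.49], [False]))  # {(0.5, 0.525): (1, 1.0, 0.5125)}
```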

If the model is well calibrated, we expect it to be right 51% of the time when it predicts that a team has a 51% win chance. This is what we observe with the LoLDraftAI model:

| Confidence Bucket | Number of Games | Model Accuracy | Expected Accuracy |
|---|---|---|---|
| 50-52% | 1,206,459 | 0.513559 | 0.5125 |
| 52-55% | 1,069,927 | 0.537054 | 0.5375 |
| 55-57% | 837,552 | 0.564636 | 0.5625 |
| 57-60% | 586,352 | 0.591524 | 0.5875 |
| 60-62% | 367,006 | 0.617445 | 0.6125 |
| 62-65% | 206,157 | 0.648166 | 0.6375 |
| 65-68% | 104,687 | 0.669988 | 0.6625 |
| 68-70% | 48,145 | 0.701070 | 0.6875 |
| 70-72% | 20,691 | 0.725388 | 0.7125 |
| 72-75% | 8,788 | 0.754552 | 0.7375 |

Table 1: Model accuracy results as of 4th September 2025

This also explains why the overall accuracy is 56%: the model sees most drafts as close to 50/50. Drafts that give a team more than a 60% winrate are rare, but LoLDraftAI's champion suggestions make them easier to find.

Conclusion

The LoLDraftAI model architecture is simple and transparent, and this is what makes it effective and trustworthy. We simply take the large amount of data available for League of Legends and apply modern deep learning techniques.